Blackbox AI refers to artificial intelligence systems that can be powerful and often very accurate, but whose inner workings are opaque. We can see the data that goes in and the answer that comes out. The decision-making process in the middle, however, is hidden from view.

Understanding What Blackbox AI Is

Imagine you have a magic box. You put a complex question in one side, and an answer, usually a correct one, comes out the other. You do not know how it found the answer, but it did. This is how a Blackbox AI works. These systems use complex models such as deep neural networks. These models have so many layers and parameters that even their creators cannot trace the exact path of a single decision.

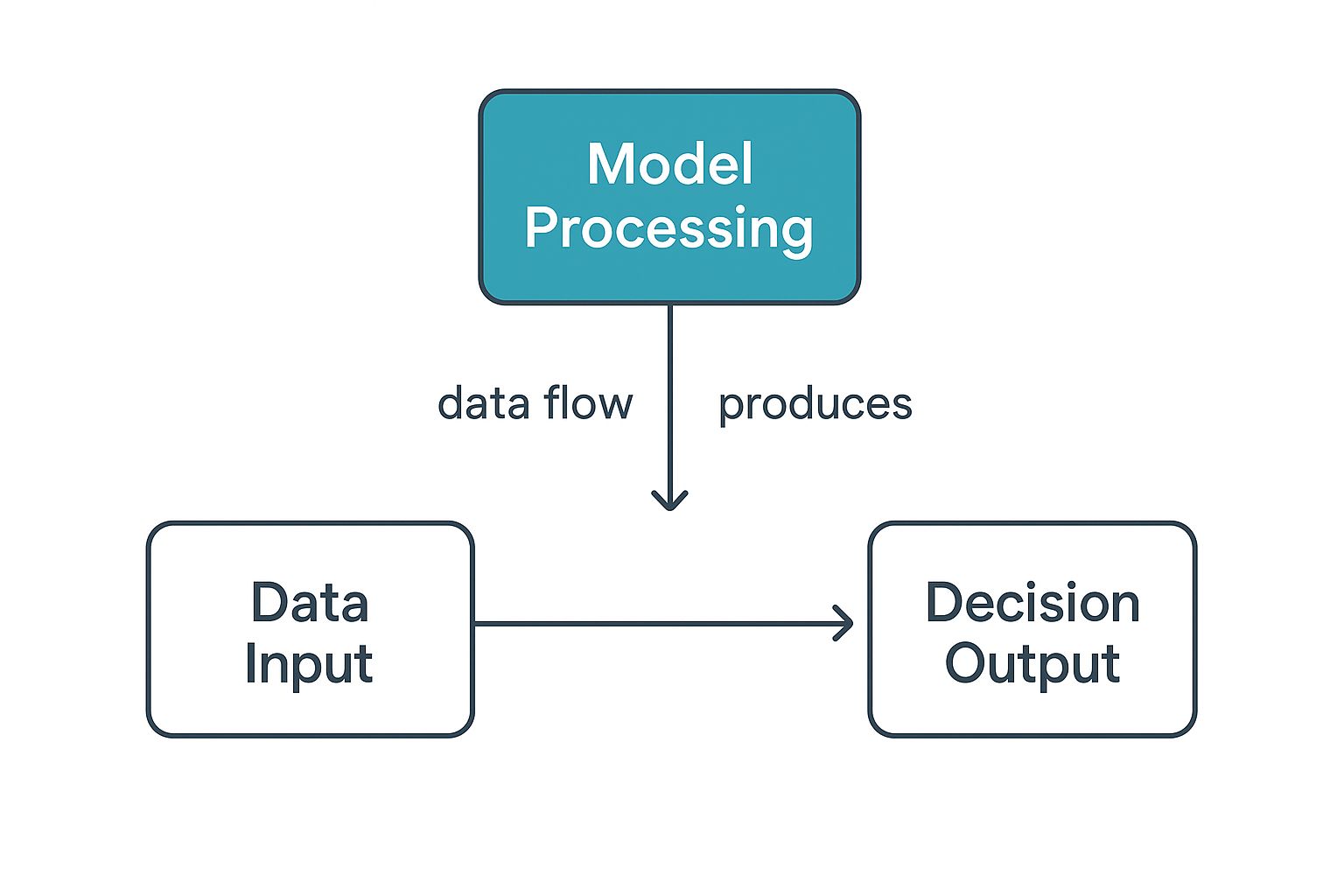

This simple infographic explains the concept:

We control the input data and get a clear output. The middle “processing” stage inside the model remains a mystery. This lack of visibility defines Blackbox AI. It creates both exciting opportunities and real challenges for businesses.

Blackbox AI vs. Whitebox AI Comparison

It helps to compare Blackbox models to their transparent counterparts, called “whitebox” AI. Blackbox systems focus on performance. Whitebox models are designed for clarity, so you can easily follow their logic.

Here is a breakdown of the main differences:

| Feature | Blackbox AI (e.g., Deep Neural Networks) | Whitebox AI (e.g., Decision Trees) |
|---|---|---|
| Transparency | Opaque; internal workings are not understandable to humans. | Transparent; the decision-making process is clear and easy to follow. |
| Complexity | High; involves millions or even billions of parameters. | Low to moderate; based on simple, explainable rules. |
| Performance | Typically very high accuracy, especially with complex data. | Generally lower accuracy, especially on unstructured data like images. |
| Use Cases | Image recognition, natural language processing, autonomous driving. | Credit scoring, fraud detection, medical diagnosis where explainability is crucial. |
| Interpretability | Very difficult; requires specialized techniques to approximate explanations. | Very easy; you can literally trace the path from input to output. |

The choice between a Blackbox and a whitebox model involves a trade-off. You must decide if you need the best performance or if you need to understand the AI’s decision. Whitebox models are often the safer choice in high-stakes fields like finance. Blackbox AI is often the best option for tasks where predictive power is most important.
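To make the whitebox side of this trade-off concrete, here is a minimal Python sketch of a decision tree that reports the exact rules behind each decision. The tree, its feature names (`credit_score`, `debt_to_income`), and its thresholds are hypothetical and hand-written for illustration; a real tree would be learned from data.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Union


@dataclass
class Node:
    """A split: compare one feature against a threshold and branch."""
    feature: str
    threshold: float
    left: Tree   # branch taken when value <= threshold
    right: Tree  # branch taken when value > threshold


Tree = Union[Node, str]  # a leaf is simply the decision label


def predict_with_path(tree: Tree, applicant: dict[str, float]) -> tuple[str, list[str]]:
    """Walk from the root to a leaf, recording every rule that was applied."""
    path: list[str] = []
    while isinstance(tree, Node):
        value = applicant[tree.feature]
        if value <= tree.threshold:
            path.append(f"{tree.feature} = {value} <= {tree.threshold}")
            tree = tree.left
        else:
            path.append(f"{tree.feature} = {value} > {tree.threshold}")
            tree = tree.right
    return tree, path


# Hypothetical tree; the features and thresholds are illustrative, not learned.
loan_tree = Node("credit_score", 650,
                 left="deny",
                 right=Node("debt_to_income", 0.4, left="approve", right="deny"))

decision, path = predict_with_path(loan_tree, {"credit_score": 700, "debt_to_income": 0.5})
print(decision)  # deny
for step in path:
    print("  because", step)
```

Every prediction comes with its full reasoning. A deep neural network offers no equivalent path to print.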

How Blackbox Models Learn and Make Decisions

Blackbox models typically learn through deep learning, which uses structures called neural networks. These are loosely inspired by the human brain’s network of neurons. This layered design helps the AI find subtle patterns in data that a person might miss.

It all begins with a phase called training. An image recognition model might see millions of labeled pictures. For each image, the AI makes a guess and checks its answer against the label.

After each guess, it adjusts its internal wiring to shrink its error. These “wires,” or parameters, can number in the millions or billions. This cycle of guessing, checking, and adjusting helps the model create its own logic for future decisions.
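The guess, check, and adjust cycle can be sketched with a single artificial neuron (logistic regression) trained by gradient descent. This is a simplified illustration under stated assumptions: real deep networks stack many layers of such units and compute the adjustments with backpropagation, and the toy dataset here (the logical OR of two inputs), the learning rate, and the number of passes are arbitrary choices.

```python
from __future__ import annotations

import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: list[float], bias: float, x: list[float]) -> float:
    """Guess: a probability between 0 and 1 for the positive label."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train(data, epochs: int = 2000, lr: float = 0.5, seed: int = 0):
    rng = random.Random(seed)
    weights = [rng.uniform(-0.5, 0.5) for _ in range(len(data[0][0]))]
    bias = 0.0
    for _ in range(epochs):
        for x, label in data:
            guess = predict(weights, bias, x)
            error = guess - label  # check: how far off the guess was
            # adjust: move each parameter against its log-loss gradient
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


# Toy labeled examples: the logical OR of two inputs.
or_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train(or_data)
for x, label in or_data:
    print(x, "label:", label, "guess:", round(predict(weights, bias, x), 3))
```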

The Training Process Unpacked

The “black box” is built during the training phase. The AI tunes its parameters, often millions or billions of them, creating a web of calculations so dense that a human cannot follow a single input through to its output.
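Parameter counts grow quickly because every connection between two layers carries its own weight. A fully connected layer with `n_in` inputs and `n_out` outputs has `n_in * n_out` weights plus `n_out` biases. The layer sizes below are hypothetical examples, not any specific production model.

```python
from __future__ import annotations


def count_dense_parameters(layer_sizes: list[int]) -> int:
    """Weights (n_in * n_out) plus biases (n_out) for each fully connected layer."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical small image classifier: 28x28 pixels in, two hidden layers, 10 classes out.
print(count_dense_parameters([784, 512, 512, 10]))       # 669706
# Three hypothetical layers of 10,000 units each.
print(count_dense_parameters([10_000, 10_000, 10_000]))  # 200020000
```

Doubling the width of every layer roughly quadruples the weight count, which is one reason parameter counts climb into the billions so quickly.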

Here is an analogy: You can teach a toddler what a cat is by showing them many photos. The toddler can then identify a cat they have never seen before. If you ask them to explain how they knew, they would struggle. They just know. A Blackbox model works in a similar but much more complex way.

A key takeaway is that Blackbox models develop their own problem-solving logic. They do not follow a pre-written script but apply patterns learned from training data.

This self-developed logic makes them very powerful. They can handle new, unseen information because they have learned general patterns instead of just memorizing examples.

From Learning to Decision-Making

The model is ready to work after training. It processes new data through its network of learned parameters. This happens very quickly to provide a prediction, classification, or recommendation. For those curious about creating these systems, a beginner’s guide to building AI agents can offer a good foundation.

The model’s final decision is a highly educated guess. It is based on the statistical probabilities it found during training.
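Classifiers commonly produce one raw score per possible answer and convert those scores into probabilities with the softmax function; the “educated guess” is then the answer with the highest probability. A minimal sketch, assuming hypothetical labels and scores:

```python
from __future__ import annotations

import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw model scores (logits) into probabilities that sum to 1."""
    top = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


labels = ["cat", "dog", "fox"]
scores = [2.0, 1.0, 0.1]  # hypothetical raw outputs of a trained model
probs = softmax(scores)
best = max(range(len(labels)), key=lambda i: probs[i])
print(labels[best], [round(p, 3) for p in probs])  # cat [0.659, 0.242, 0.099]
```

The model never says “this is certainly a cat”; it says “cat” is the most probable answer given what it learned.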

Here is a simplified look at the flow:

- Input Data: The model receives new information, like text, an image, or financial data.

- Internal Processing: The data moves through the trained neural network’s layers, causing millions of small calculations.

- Output Generation: The model produces an outcome, such as translating text or flagging a transaction.
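This three-step flow can be sketched as a forward pass through a tiny two-layer network. The weights below are hypothetical stand-ins for learned parameters, and the labels mirror the transaction-flagging example. A real model has far more layers and parameters, but each layer performs the same kind of weighted sum followed by a simple non-linearity (ReLU here).

```python
from __future__ import annotations


def relu(v: list[float]) -> list[float]:
    return [max(0.0, x) for x in v]


def dense(inputs: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


# Hypothetical "learned" parameters for a 3-input, 4-hidden, 2-output network.
W1 = [[0.2, -0.5, 0.1], [0.7, 0.3, -0.2], [-0.4, 0.6, 0.5], [0.1, 0.1, 0.1]]
b1 = [0.0, 0.1, -0.1, 0.0]
W2 = [[0.5, -0.3, 0.8, 0.2], [-0.6, 0.9, -0.1, 0.4]]
b2 = [0.0, 0.0]


def forward(x: list[float]) -> list[float]:
    hidden = relu(dense(x, W1, b1))  # internal processing: hidden layer
    return dense(hidden, W2, b2)     # output generation: one score per class


labels = ["legitimate", "flag for review"]
scores = forward([1.0, 0.5, 2.0])  # input data: three hypothetical transaction features
print(labels[scores.index(max(scores))], scores)
```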

This efficiency allows Blackbox AI to power real-time applications. These include moderating online content and guiding autonomous vehicles. Its ability to make fast, accurate judgments comes from its deep, data-driven learning.

Real-World Examples of Blackbox AI in Action

Blackbox AI is already a part of our daily lives. You likely interact with these systems many times a day. They are the invisible engines behind many modern services.

Consider the recommendation engine on a streaming service. It uses your viewing history and what other people with similar tastes enjoy. The model processes this information and suggests a movie or show. That is a simple example of Blackbox AI.
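One classic technique behind this kind of recommendation is user-based collaborative filtering: find users whose ratings resemble yours, then score titles you have not seen by what those similar users thought of them. The sketch below is a simplified illustration with made-up users and ratings, not how any particular streaming service works; production systems often rely on learned models that are far more opaque than this.

```python
from __future__ import annotations

import math


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two users' rating vectors (unrated titles count as 0)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(r * r for r in a.values()))
    norm_b = math.sqrt(sum(r * r for r in b.values()))
    return dot / (norm_a * norm_b)


def recommend(user: str, ratings: dict[str, dict[str, float]]) -> str | None:
    """Score each unseen title by the similarity-weighted ratings of other users."""
    mine = ratings[user]
    scores: dict[str, float] = {}
    for other, theirs in ratings.items():
        if other == user:
            continue
        sim = cosine(mine, theirs)
        for title, rating in theirs.items():
            if title not in mine:
                scores[title] = scores.get(title, 0.0) + sim * rating
    return max(scores, key=scores.get) if scores else None


# Hypothetical viewing data: user -> {title: rating from 1 to 5}.
ratings = {
    "ana": {"Alien": 5, "Heat": 4, "Up": 1},
    "ben": {"Alien": 5, "Heat": 5, "Up": 1, "Ronin": 4},
    "cho": {"Alien": 1, "Up": 5, "Coco": 5},
}
print(recommend("ana", ratings))  # Ronin
```

Ana’s tastes closely match Ben’s, so Ben’s high rating for “Ronin” outweighs Cho’s rating for “Coco”.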

In Healthcare and Medical Diagnostics

The healthcare industry uses Blackbox AI to analyze medical images with high precision. A model can review thousands of X-rays or MRIs to find early signs of disease. The scan is the input, and the output is a flagged area for a radiologist to review.

These systems can often find very subtle patterns that a human might miss. Their accuracy sometimes meets or exceeds that of human experts. This is a powerful but opaque process that can deliver life-saving results.

The growth in this area is significant. The overall AI market is expected to reach about $243.7 billion by 2025. This growth is driven in part by adoption in key sectors like healthcare. You can see the complete market size statistics for more details.

In Finance and E-Commerce

In finance, Blackbox AI is used for fraud detection and high-frequency trading. An AI can monitor millions of transactions in real-time. The transaction data goes in, and an immediate “block this purchase” alert can stop fraud.

These AI systems protect consumers and financial institutions from large losses. They analyze patterns too complex and fast for human teams. They operate at a speed and scale that is not possible with manual oversight.
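The real-time decision step can be sketched as score, compare, act. In production the score would come from a trained Blackbox model; here a simple z-score against the customer’s past spending stands in for it (a hypothetical substitute so the sketch runs without a trained model), and the threshold of 3.0 is an arbitrary assumption.

```python
from __future__ import annotations

import statistics


def anomaly_score(amount: float, history: list[float]) -> float:
    """Hypothetical stand-in for an opaque model: how many standard deviations
    this amount sits above the customer's past spending."""
    mean = statistics.fmean(history)
    spread = statistics.pstdev(history) or 1.0  # avoid dividing by zero
    return (amount - mean) / spread


def decide(amount: float, history: list[float], threshold: float = 3.0) -> str:
    """Turn the score into an immediate action."""
    return "block" if anomaly_score(amount, history) > threshold else "allow"


history = [42.0, 18.5, 60.0, 35.0, 27.5]  # hypothetical past purchases
print(decide(45.0, history))    # allow
print(decide(2500.0, history))  # block
```

Raising the threshold means fewer false alarms but more missed fraud; lowering it does the opposite.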

E-commerce uses Blackbox AI for dynamic pricing and inventory management.

Here are a few ways it is used:

- Personalized Shopping: Websites create a product feed for you based on what you have viewed and bought.

- Customer Service Chatbots: AI bots answer common customer questions, which frees up human agents.

- Supply Chain Optimization: These models predict product demand to help retailers avoid stock shortages.
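Demand prediction can be illustrated with simple exponential smoothing, a classic and fully transparent forecasting method. It is a stand-in rather than a Blackbox model: modern demand forecasters learn from many more signals, but a transparent baseline like this helps judge whether a complex model is actually adding accuracy. The weekly sales figures and the smoothing factor `alpha` are hypothetical.

```python
from __future__ import annotations


def exponential_smoothing(demand: list[float], alpha: float = 0.5) -> float:
    """Forecast next period's demand; a larger alpha weights recent periods more heavily."""
    level = demand[0]
    for observed in demand[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


weekly_units = [120, 130, 125, 160, 170]  # hypothetical sales history
print(exponential_smoothing(weekly_units))  # 156.25
```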

Each of these examples shows a similar process. We provide the input and get a valuable output. We trust the model’s hidden logic to connect them. The results are powerful, even if the “how” is a mystery.

The Key Benefits of Blackbox AI Models

So, why would anyone use a Blackbox model if we do not know how it works? The simple answer is performance.

Blackbox AI is often the best tool when you need raw accuracy and power. These models are very good at finding complex patterns in data. These patterns are often invisible to human experts. This ability allows them to make predictions with a high degree of precision.

An AI can beat a chess grandmaster by recognizing deep strategic patterns. A human player might not even think of these patterns. This superior performance comes from the model’s complex, hidden workings. It can achieve results that simpler, more transparent models cannot.

Unmatched Speed and Efficiency

Another major benefit is the speed of Blackbox AI. These systems can process huge datasets and make decisions in real-time. They operate at a scale and speed that humans cannot match. This makes them ideal for tasks where every millisecond is important.

Online advertising is a classic example. A Blackbox model can analyze millions of user data points instantly. It decides which ad is most relevant to you at that moment. This fast decision-making makes the ad more effective.

This efficiency can translate directly into business success. Blackbox.ai, a platform for developers, became profitable with a small team. It reached $31.7 million in annual revenue with just 180 employees. This highlights the power of automation. You can read the full usage statistics on wearetenet.com to learn about its global adoption.

Automation of Complex Tasks

Blackbox AI also automates tasks that are very complex. You can hand these jobs to an AI. This frees up your team to focus on strategy, creativity, and problem-solving.

This is happening in many industries today.

- Customer Support: AI chatbots handle common questions, so human agents can solve harder problems.

- Manufacturing: AI models monitor production lines to find small defects and improve quality control.

- Content Creation: Some AI tools for business growth can create reports from large datasets, saving analysts time.

Blackbox AI takes over these complex tasks. It allows organizations to run more smoothly and use their human talent better. It is a powerful tool for increasing productivity.

The benefits of Blackbox AI are clear. The combination of accuracy, speed, and automation delivers real results. For many applications, these advantages are too strong to ignore.

Understanding the Risks and Ethical Concerns

The main problem with Blackbox AI is that you cannot see how it thinks. This lack of clarity makes it hard to trust a model’s output. It is also difficult to fix mistakes or understand why it works. This creates serious risks when we let these systems make important decisions.

If you cannot see how a model works, it is hard to verify that it is fair. An AI trained on flawed data can pick up and even amplify human biases. This can lead to unfair outcomes in areas like hiring or loan applications. A model might discriminate against someone without anyone knowing why.

The Problem of Hidden Biases

Hidden biases are a serious danger of a Blackbox system. A model may seem to perform perfectly in a test environment. In the real world, it could make decisions based on wrong factors. An AI for screening job applicants might favor candidates from certain zip codes. This could happen because the training data had more successful hires from those areas, not because they were more qualified.
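One practical way to catch this kind of hidden bias without opening the model is to audit its outputs by comparing selection rates across groups. The sketch below computes each group’s selection rate and the ratio of the lowest rate to the highest, then flags ratios under 0.8, the “four-fifths” rule of thumb used in US employment guidelines. The zip-code groups and outcome counts are made up for illustration.

```python
from __future__ import annotations

from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of applicants selected within each group."""
    totals: dict[str, int] = defaultdict(int)
    chosen: dict[str, int] = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        chosen[group] += int(selected)
    return {group: chosen[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())


# Hypothetical screening outcomes, grouped by applicant zip code.
decisions = ([("zip_A", True)] * 30 + [("zip_A", False)] * 70
             + [("zip_B", True)] * 12 + [("zip_B", False)] * 88)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, "ratio:", round(ratio, 2))
if ratio < 0.8:
    print("Below the four-fifths guideline: investigate before deploying.")
```

A low ratio does not prove discrimination, but it is a cheap signal that the model may be leaning on a proxy such as zip code.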

This creates a cycle of unfairness that is hard to spot and fix. The consequences can be severe. They can lead to legal issues and damage a company’s reputation. We see similar issues with other advanced AI. Our guide on the AI image generator explores how these tools also need careful use to avoid biased results.

Regulatory and Security Challenges

The secretive nature of Blackbox models also creates problems with regulations. Many industries have rules that require decisions to be explainable. A Blackbox AI cannot provide this on its own. This can force companies to choose between a high-performing model and their legal duties.

These systems can also be vulnerable. Attackers can sometimes change input data in small ways to trick a model. It is a major challenge to identify and defend against these attacks because the model’s logic is a mystery.
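The idea behind such attacks can be sketched with the fast gradient sign method (FGSM): nudge every input feature slightly in the direction that most hurts the model’s score. For deep networks the gradient comes from backpropagation; with the hypothetical linear model below, the gradient of the score with respect to the input is simply the weight vector, which keeps the sketch dependency-free.

```python
from __future__ import annotations


def score(weights: list[float], bias: float, x: list[float]) -> float:
    """Linear model: a positive score means 'approve', a negative one means 'reject'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm_nudge(weights: list[float], x: list[float], epsilon: float) -> list[float]:
    """FGSM-style step that lowers the score. For a linear model the gradient of the
    score with respect to x is `weights`, so each feature moves by epsilon
    against the sign of its weight."""
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model with 100 weakly weighted features.
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(100)]
bias = 0.0
x = [0.6 if i % 2 == 0 else 0.5 for i in range(100)]

x_adv = fgsm_nudge(weights, x, epsilon=0.06)
print("original score:", round(score(weights, bias, x), 3))
print("attacked score:", round(score(weights, bias, x_adv), 3))
print("largest change to any feature:", round(max(abs(a - b) for a, b in zip(x, x_adv)), 3))
```

No single feature moves by more than 0.06, yet across 100 features the small shifts add up (0.06 times the sum of the absolute weights, which is 10, gives a 0.6 drop), enough to flip a positive score to a negative one.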

A key problem is that when a Blackbox AI makes a mistake, it can be very hard to find the root cause. You know the final answer was wrong, but you cannot trace the error back through the model’s complex calculations.

The growth of Blackbox AI has led to a push for regulation. The European Union’s AI Act, which entered into force in 2024 with obligations phasing in over the following years, sets transparency and human-oversight requirements for high-risk systems. This is to fight the dangers of these opaque algorithms. U.S. regulators are also concerned about unchecked AI decision-making. You can find more details about these AI risks and breakthroughs in 2025 on ts2.tech.

The Push for Explainable AI

As we rely more on Blackbox AI every day, trusting decisions we do not understand becomes more unsettling. This concern has fueled a push for more transparent systems, a field known as Explainable AI, or XAI.

XAI aims to look inside these complex models to see what is happening. It is not just about curiosity. It is about building trust and accountability into our tools. Knowing why an AI makes a critical judgment is essential for using it safely.

How XAI Makes Sense of Complex Models

Explainable AI does not simplify powerful models. Instead, it provides techniques that act as interpreters. They translate the AI’s complex logic into something we can follow. Two popular methods are LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations).

These tools are like a detective’s magnifying glass. An AI might deny a loan application. LIME or SHAP can show the clues the model used. It might highlight that a “low credit score” and a “high debt-to-income ratio” were the main reasons.
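SHAP builds on Shapley values from game theory: each feature’s contribution is its average marginal effect on the output across every possible combination of the other features. The brute-force sketch below computes exact Shapley values for a hypothetical three-feature loan model, treating a feature as “absent” by setting it to a baseline value (one common convention, assumed here). The `shap` library uses much faster approximations and model-specific algorithms; this sketch only shows what the numbers mean.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values by enumerating every coalition of features.
    Cost grows as 2**n, so this is only feasible for a handful of features."""
    n = len(x)

    def value(coalition):
        # Features in the coalition take the applicant's values; the rest stay at baseline.
        point = [x[k] if k in coalition else baseline[k] for k in range(n)]
        return model(point)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def approval_score(f):
    """Hypothetical loan model with an interaction between credit and tenure."""
    credit, dti, years = f
    return 0.6 * credit - 0.9 * dti + 0.2 * years + 0.3 * credit * years


features = ["credit_score", "debt_to_income", "years_employed"]
applicant = [0.3, 0.7, 0.2]  # scaled to 0..1: low credit score, high debt
baseline = [0.6, 0.3, 0.5]   # hypothetical "average applicant"

phis = shapley_values(approval_score, applicant, baseline)
for name, phi in zip(features, phis):
    print(f"{name:>15}: {phi:+.4f}")
```

The contributions always add up to the difference between the applicant’s score and the baseline score. Here the high debt-to-income ratio (about -0.36) and the low credit score (about -0.21) are the largest negative contributions, the same kind of explanation described above.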

In their most common use, these tools do not explain the entire model at once. They give you a simple reason for a single decision. This helps you get the “why” without needing a Ph.D. in machine learning.

This is very important. It helps developers find bugs in their models and spot hidden biases. It also helps them give clear reasons for any AI-driven action. We are now moving from just accepting an AI’s answer to understanding its reasoning.

The Global Push for Transparency and Regulation

This is not just a tech trend; it is becoming law. Regulators around the world now see the risks of opaque AI. This is especially true in areas like finance, hiring, and healthcare. They are starting to write rules that require a basic level of transparency.

The EU’s AI Act is a great example. It places strict rules on high-risk systems, such as AI used to assess creditworthiness. If a bank uses an AI to reject a loan, the bank must be able to explain why. This regulatory pressure is forcing companies to be more open.

This all points to a large industry shift. The conversation is no longer just about performance. Developers and organizations now look for ways to balance the power of Blackbox AI with the need for it to be explainable and trustworthy.

Got Questions About Blackbox AI?

We receive many questions about Blackbox AI and its real-world uses. Let’s answer some of the most common ones.

What Is the Difference Between Blackbox and Whitebox AI?

The main difference is transparency. A whitebox AI, like a simple decision tree, gives you a clear path. You can follow the logic from start to finish. This makes it easy to understand and fix.

Blackbox models are the opposite. Their inner workings are a mystery. Their results are often very accurate, but a human cannot follow the decision-making process.

Can You Make a Blackbox AI More Transparent?

Yes, you can. This is a major focus in the AI community. The field of Explainable AI (XAI) works to open up these complex systems. Tools like LIME and SHAP analyze how a model’s output responds to its inputs. They find which input factors were most important for a single decision.
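LIME’s core idea can be sketched in a few dozen lines: sample points near the input being explained, weight them by how close they are, and fit a simple weighted linear model to the black box’s outputs on those points. The slopes of that local model act as the explanation. This is a simplified sketch: the actual `lime` package adds more (for example, sampling based on training-data statistics, feature selection, and a regularized regression), and the sample count, noise scale, kernel width, and `black_box` function here are arbitrary assumptions.

```python
from __future__ import annotations

import math
import random


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [row[:] + [b[r]] for r, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def local_surrogate(black_box, x, n_samples=500, scale=0.1, kernel_width=0.25, seed=0):
    """LIME-style sketch: sample points near x, weight them by closeness,
    and fit a weighted linear model whose slopes explain this one prediction."""
    rng = random.Random(seed)
    d = len(x)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]  # accumulates X^T W X
    xtwy = [0.0] * (d + 1)                           # accumulates X^T W y
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)  # closer samples count more
        row = [1.0] + z                                # intercept column plus features
        y = black_box(z)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    return solve(xtwx, xtwy)[1:]  # drop the intercept, keep per-feature slopes


def black_box(z):
    """Hypothetical opaque model with two inputs."""
    return math.tanh(2 * z[0]) - z[1] ** 2


slopes = local_surrogate(black_box, [0.1, 0.8])
print([round(s, 2) for s in slopes])
```

For this hypothetical model the slopes land near its true local sensitivities (roughly 1.9 for the first input and -1.6 for the second), even though the explainer only ever called `black_box` and never looked inside it.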

These methods do not show the whole process. They give you a good look at the “why” behind a specific result. This is important for building trust and finding hidden biases.

These tools are like interpreters. They translate the AI’s complex math into something a human can understand. They do this without hurting the model’s performance. It is a way to get high accuracy and some understanding.

Which Industries Should Be Most Cautious?

Some fields must be very careful with Blackbox AI. When the stakes are high and people’s lives or livelihoods are involved, being able to explain a decision is often a requirement.

A few industries need to be especially cautious:

- Finance: For credit scores or loan applications, an unexplained “no” can lead to accusations of discrimination.

- Healthcare: In diagnostics, every recommendation must be explainable. This is vital for patient safety and for doctors to trust the technology.

- Hiring and HR: Using an opaque model to screen job candidates is a big risk. It is too easy to create a system that reinforces biases.

In these sectors, not being able to explain a decision is a major liability, not just an inconvenience.

Ready to harness the power of AI and automation for your business? creavoid builds intelligent systems and data-driven strategies to fuel your growth. Visit us at https://creavoid.com to see how we can help you scale.